Use 360 feedback to develop your people at scale

Transform sporadic employee feedback into a dynamic development ecosystem that empowers employees to drive their own growth while aligning with organizational objectives. Our intelligent scheduling and self-initiated 360-degree feedback capabilities ensure continuous, relevant insights throughout the employee lifecycle.

The world's best brands choose Qualtrics

Today’s reality

Underperforming development processes

Even after organizations standardize their development processes, employees do not feel they are getting the development opportunities they crave, resulting in turnover and disengagement

Losing top talent

Despite implementing professional development programs, organizations continue to lose top talent to competitors, leading to ongoing cycles of hiring and training that drain resources

Limited ability to measure impact

Outdated or fragmented systems make it hard to assess the long-term effects of employee development on retention and engagement

With Qualtrics 360 Development Feedback you can

- Access comprehensive 360-degree feedback through automated reports that compile and analyze input from multiple raters

- Send perfectly timed feedback requests to the right people automatically, ensuring maximum response rates

- Empower employees to drive their own development through a self-service portal while reducing HR administrative tasks

- Close skill gaps instantly by connecting feedback results directly to relevant learning resources and training modules

- Build performance frameworks that precisely match your organization's goals with customizable competency templates

Supercharge your employees’ development without additional overhead

Build a 360-degree feedback program that enhances employee development across all levels of the organization. Enable HR leaders, managers, and employees to gather diverse feedback, helping them identify strengths, weaknesses, and areas for growth.

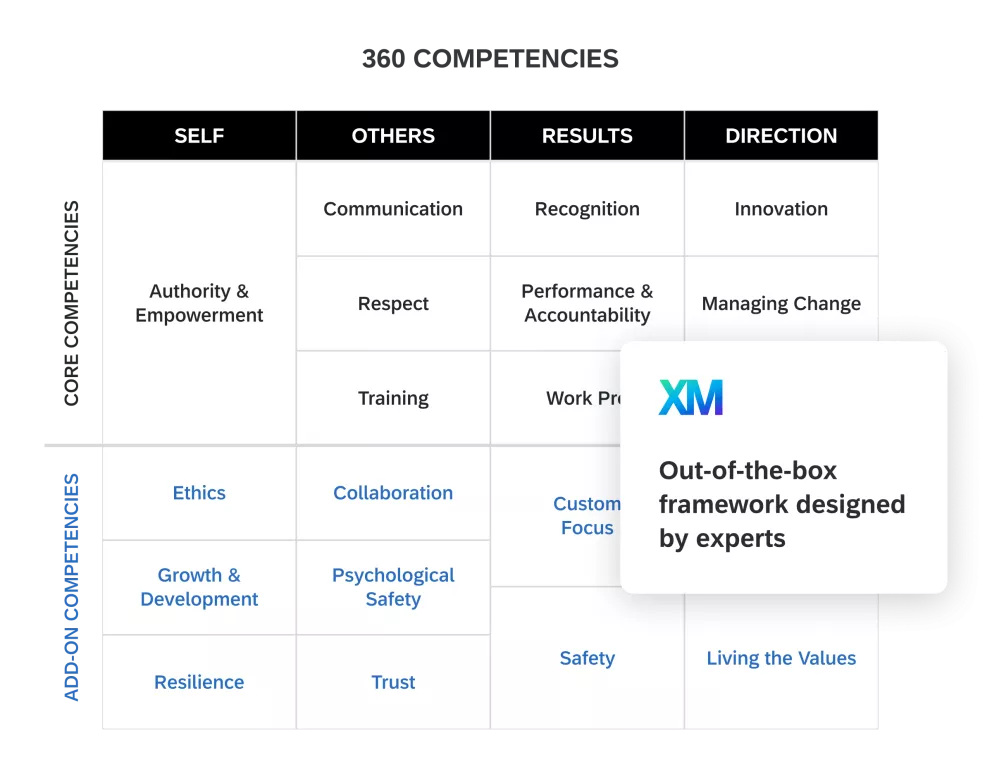

- Jumpstart your development program using our expert-created, out-of-the-box development framework for senior leaders, people managers and individual contributors

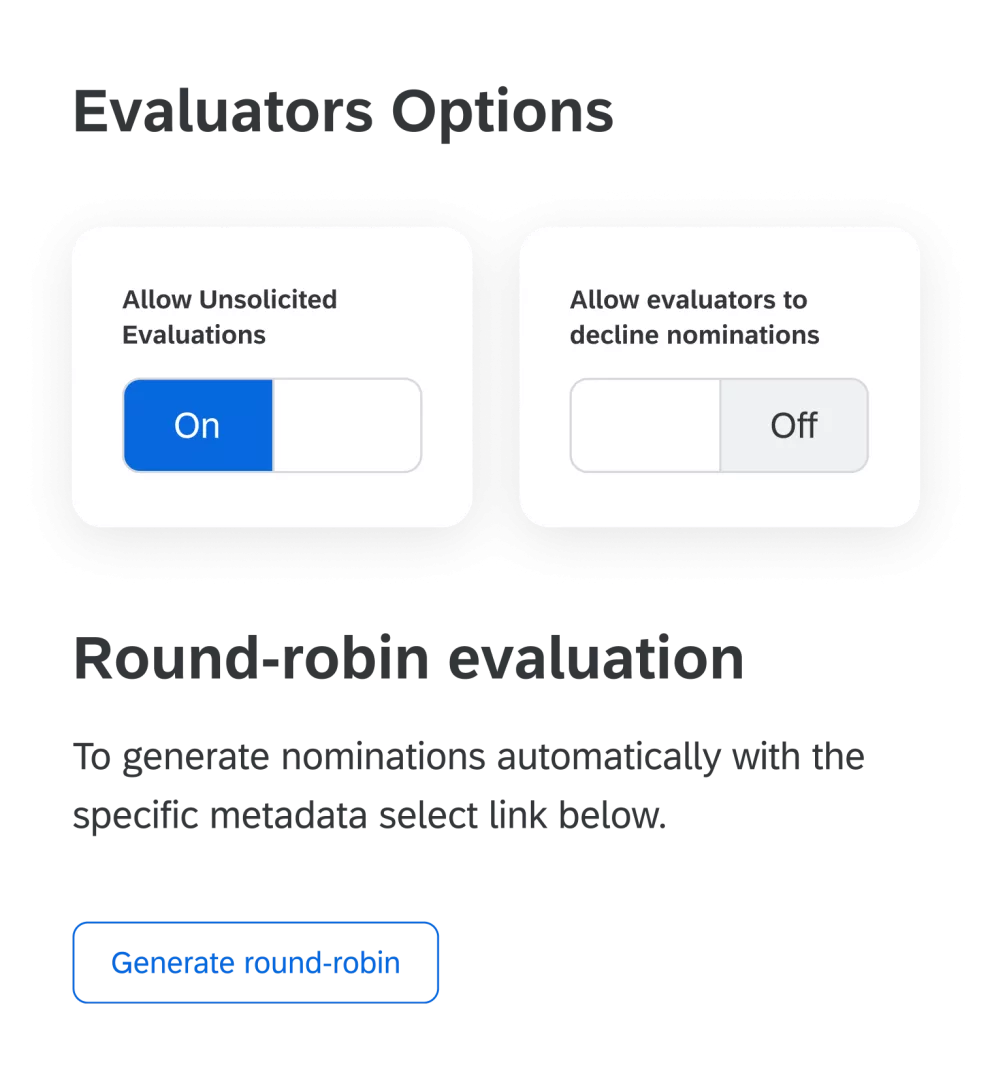

- Scale development programs more efficiently for more of your people, through automated nomination, evaluation and reporting workflows

- Enable employees to own more of the 360 processes and reduce dependency on HR teams with the intuitive participant portal

Illuminate performance patterns with data-driven intelligence

Turn vast amounts of 360-degree feedback data into clear, actionable intelligence that reveals both immediate opportunities and long-term talent trends across your organization. Our analytics engine connects the dots between individual capabilities, team dynamics, and organizational effectiveness to inform strategic talent decisions.

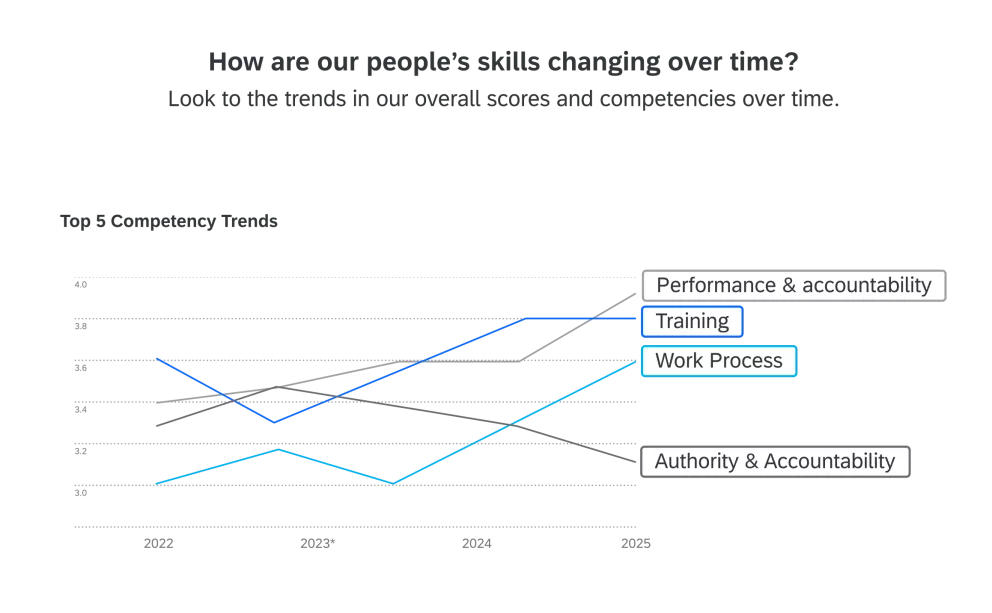

- Monitor organization-wide development progress in real time through an interactive analytics dashboard that spotlights key trends

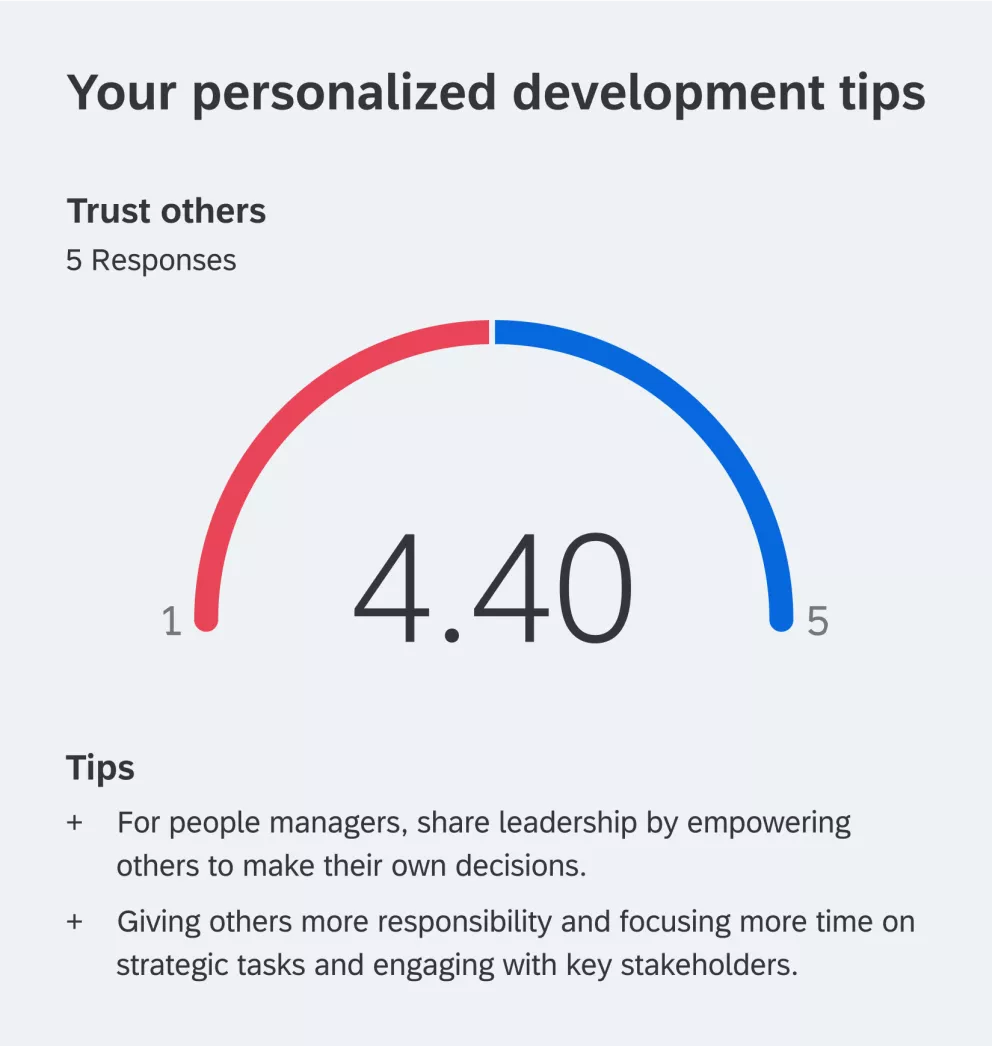

- Transform 360 feedback into actionable growth plans with personalized reports that outline specific next steps

- Track program effectiveness with longitudinal studies that measure impact on performance metrics over months and years

- Pinpoint precise skill gaps across departments, leadership levels, and functions to target development investments

- Uncover what makes top performers successful by analyzing integrated data from multiple sources to reveal key competency patterns

Ebook

Employee lifecycle feedback: Understanding the moments that matter most to your employees

Learn how to get started with a lifecycle approach to employee experience that uses employee feedback at key stages in their journey with the organization to understand, and improve, the moments that matter most.

Drive business outcomes by connecting development results with KPIs

Bridge the gap between talent development investments and bottom-line results by directly connecting people development to business performance metrics. Our 360 feedback solution provides the quantitative evidence needed to optimize talent strategies and demonstrate clear return on development investments.

- Link development programs directly to business results by tracking how learning initiatives impact key performance metrics

- Transform insights into action through automated workflows that convert feedback into concrete operational improvements

- Make data-driven talent investment decisions using evidence-based analytics that prove ROI and optimize spending

How we are driving growth

Upgrade your programs with flexible, scalable plans

360 Development Feedback FAQs

What is 360 feedback?

360-degree feedback is a method of employee review that ensures every member of staff has the opportunity to receive development and performance feedback from their supervisor, manager and a set number of peers.

How does 360 feedback software work?

By taking a 360-degree approach to employee development and experience, organizations of all sizes can close talent gaps, increase organizational performance and bring teams closer together. For example, they can quickly extend development programs to more employees without additional administrative overhead, and deliver employee development experiences that best meet their organization’s unique needs.

What does 360 feedback software do?

Ultimately, with the right 360 feedback software, it becomes possible to analyze every stage of the employee journey, including the feedback shared, to devise truly effective action plans that bring out the most from your people and the business.

Who should use 360 feedback?

360 feedback is suitable for organizations of all sizes and industries. It can be particularly beneficial for managers, team leaders, and employees in roles requiring interpersonal or functional skills or leadership competencies.

More employee experience solutions
