
An introduction to MaxDiff analysis & design

Updated December 5, 2024

Hidden away at the bottom of the Matrix Table in the survey question options is the MaxDiff question type. This low-key entrance belies the usefulness and power of this feature in Qualtrics CoreXM. In this post, I will give you a researcher’s guide to what MaxDiff is, and when, why, and how to use it. It is important to acknowledge the developer of the MaxDiff technique, Professor Jordan Louviere, whose team at the Centre for the Study of Choice (at the University of Technology Sydney) are world leaders in this technique.

Why should you be interested in MaxDiff?

To illustrate, I will use a tourism-related marketing problem and outline the differences between the results we get from traditional rating scales and from MaxDiff (by the way, this example draws on my time working in the tourism research industry).

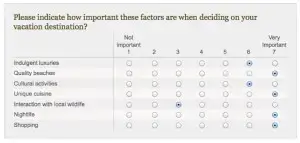

So, let’s say that my objective is to determine the two destination attributes (I don’t have a big marketing budget!) that matter most to my potential visitors when they make their vacation choice. For this example, the candidate attributes are indulgent luxuries, quality beaches, cultural activities, unique cuisine, interaction with local wildlife, nightlife, and shopping.

Now, of course, I can go ahead and ask respondents to rate the importance they place on each of the features I have identified (on our beloved 1 to 7 scales). However, this often results in heavily skewed data, with people generally placing reasonable to high importance on most of the features (sorry wildlife, but if you’ve seen one koala you’ve seen them all). After all, we haven’t asked them to make a trade-off, so they don’t. So, as I so often found, everything ends up being important. Not a great deal of insight there, unfortunately.

Using the MaxDiff method, however, we can get substantially more useful information (in subsequent posts I will explain the implementation and analysis of MaxDiff). The data we obtain through this method DOES involve the trade-offs we need, because we ask respondents to make choices. Hence we obtain not only greater discrimination between all of these appealing attributes, but also the relative* degree to which each is seen as an important decision factor. So by being a little daring and different, we can actually determine where to spend our resources, and be confident in our strategies.

What is MaxDiff Analysis?

MaxDiff (otherwise known as Best-Worst scaling) quite simply involves survey takers indicating the ‘Best’ and the ‘Worst’ options out of a given set. When these choices are collected within an appropriate experimental design, we can obtain a relative ranking for each option.
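To make “an appropriate experimental design” a little more concrete, here is a minimal sketch for the seven tourism attributes, assuming sets of four items (the size of the example sets that follow). It builds a cyclic balanced incomplete block design: seven sets in which every attribute appears four times and every pair of attributes appears together exactly twice, so no attribute gets more exposure than another. This is one illustrative construction, not necessarily how Qualtrics generates its MaxDiff designs, and the names `cyclic_design` and `check_balance` are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Attribute labels from the tourism example (short names chosen for illustration).
ATTRIBUTES = [
    "Luxury", "Beaches", "Culture", "Cuisine",
    "Wildlife", "Nightlife", "Shopping",
]

# {0, 1, 2, 4} is a (7, 4, 2) difference set modulo 7: every non-zero
# difference between its elements occurs exactly twice, which is what
# makes every pair of items co-occur exactly twice across the design.
BASE_BLOCK = (0, 1, 2, 4)


def cyclic_design(n_items: int, base_block: tuple[int, ...]) -> list[list[int]]:
    """Hypothetical helper: one choice set per cyclic shift of the base block."""
    return [sorted((b + shift) % n_items for b in base_block) for shift in range(n_items)]


def check_balance(design: list[list[int]]) -> tuple[Counter, Counter]:
    """Hypothetical helper: count item appearances and pair co-appearances."""
    item_counts = Counter(item for block in design for item in block)
    pair_counts = Counter(pair for block in design for pair in combinations(block, 2))
    return item_counts, pair_counts


design = cyclic_design(len(ATTRIBUTES), BASE_BLOCK)
item_counts, pair_counts = check_balance(design)

for i, block in enumerate(design, start=1):
    print(f"Set {i}: " + ", ".join(ATTRIBUTES[j] for j in block))

print("Appearances per item:", sorted(set(item_counts.values())))  # [4]
print("Co-appearances per pair:", sorted(set(pair_counts.values())))  # [2]
```

Balance of this kind matters because every attribute then gets the same number of chances to be picked as best or worst, so no attribute’s standing is an artifact of how often it was shown.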

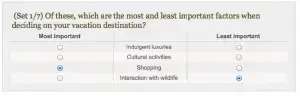

As a simple example, if I asked you to pick your most and least important factor (from our previous example) when choosing your next vacation destination, it could look something like this:

This is a simple, quick decision to make, and from it we now know that Shopping > Luxury & Culture > Wildlife. The next set in the design might look like this; as you can see, it repeats some items and introduces some new ones.

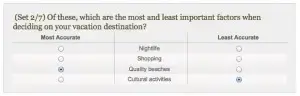

From this set, we know that Beaches > Shopping & Nightlife > Culture. As the respondent progresses through the sets in the design, we get a fuller picture of what is most important, what is least important, and everything in between.
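One simple way to see that fuller picture emerge is to count. The sketch below takes the two example answers above as if they came from a single respondent, lists the pairwise orderings each answer implies, and then computes a best-minus-worst count score per item (times chosen best minus times chosen worst, divided by times shown). This is a minimal illustration, not necessarily how Qualtrics analyses MaxDiff data; formal analyses usually fit a choice model such as a multinomial logit instead. The names `ANSWERS`, `implied_pairs` and `best_minus_worst` are hypothetical.

```python
from collections import defaultdict

# Each answer: (items shown in the set, best pick, worst pick).
# These rows are the two illustrative choices from the example sets above.
ANSWERS = [
    (["Shopping", "Luxury", "Culture", "Wildlife"], "Shopping", "Wildlife"),
    (["Beaches", "Shopping", "Nightlife", "Culture"], "Beaches", "Culture"),
]


def implied_pairs(items: list[str], best: str, worst: str) -> list[tuple[str, str]]:
    """Return the (winner, loser) pairs implied by one best-worst answer.

    The best item beats every other item in the set, and every other item
    beats the worst one; order among the unchosen middle items stays unknown.
    """
    if best == worst or best not in items or worst not in items:
        raise ValueError("best and worst must be two different items from the set")
    pairs = [(best, other) for other in items if other != best]
    pairs += [(other, worst) for other in items if other not in (best, worst)]
    return pairs


def best_minus_worst(answers: list[tuple[list[str], str, str]]) -> dict[str, float]:
    """Count-based score per item: (times best - times worst) / times shown."""
    shown, best, worst = defaultdict(int), defaultdict(int), defaultdict(int)
    for items, b, w in answers:
        for item in items:
            shown[item] += 1
        best[b] += 1
        worst[w] += 1
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}


for items, b, w in ANSWERS:
    print(", ".join(f"{win} > {lose}" for win, lose in implied_pairs(items, b, w)))

scores = best_minus_worst(ANSWERS)
for item, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{item:<10} {score:+.2f}")
```

Each best-worst answer on a set of four resolves five of the six possible pairs, which is part of why the format is so efficient. With only these two sets, Beaches tops the list and Wildlife sits at the bottom, while Cuisine has no score at all because it has not been shown yet; that gap is exactly what the remaining sets in a balanced design fill in.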

When and why should you use MaxDiff?

What we are looking for here are situations where trade-offs or choices are required (for instance, satisfaction surveys are generally not well suited to this technique). If I want to know the relative preference for types of soda, or the relative importance of various product or service attributes, then we are absolutely in business. Notably, these are exactly the areas where rating scales often fail to truly differentiate, and researchers fail to notice!

* You will notice the word relative being used a lot. That is because the results are bounded by the attributes the researcher chooses to include: MaxDiff tells you how those attributes compare with one another, not how important they are in any absolute sense. This places the onus on the researcher to be discerning and careful about which attributes go into the task…not a bad thing really.

You can learn more about survey analysis here, including conjoint analysis, the cousin of MaxDiff.
