Building ETL Workflows

About Building ETL Workflows

The Qualtrics Workflows platform includes a series of tasks for importing data from third-party sources into Qualtrics and for exporting data from Qualtrics to third-party destinations. These tasks follow the Extract, Transform, Load (ETL) framework. With ETL tasks, you can build automated, scheduled workflows that move data in either direction.

To create an ETL workflow, you must add at least 1 extractor task and at least 1 loader task. The only cap on how many of each you can add is the overall task limit for a single workflow.
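The rule above can be pictured as a small validation check. This is only a minimal sketch for illustration: the `EtlWorkflow` class, the `problems` method, and the `MAX_TASKS` value are all hypothetical and are not part of any Qualtrics API. The actual task limit is not stated on this page.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical placeholder; the real per-workflow task limit is not given here.
MAX_TASKS = 20


@dataclass
class EtlWorkflow:
    """Illustrative model of an ETL workflow: extractors, optional transforms, loaders."""
    extractors: List[str] = field(default_factory=list)
    transforms: List[str] = field(default_factory=list)  # optional step
    loaders: List[str] = field(default_factory=list)

    def problems(self) -> List[str]:
        """Return a list of setup problems; an empty list means the setup is complete."""
        issues = []
        if not self.extractors:
            issues.append("at least 1 extractor task is required")
        if not self.loaders:
            issues.append("at least 1 loader task is required")
        total = len(self.extractors) + len(self.transforms) + len(self.loaders)
        if total > MAX_TASKS:
            issues.append(f"workflow has {total} tasks; limit is {MAX_TASKS}")
        return issues


if __name__ == "__main__":
    draft = EtlWorkflow(extractors=["Extract data from SFTP files"])
    print(draft.problems())
```

A workflow with an extractor but no loader reports one problem, which mirrors how the workflows editor alerts you when part of the setup is missing.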

General Setup for Extractor and Loader Tasks

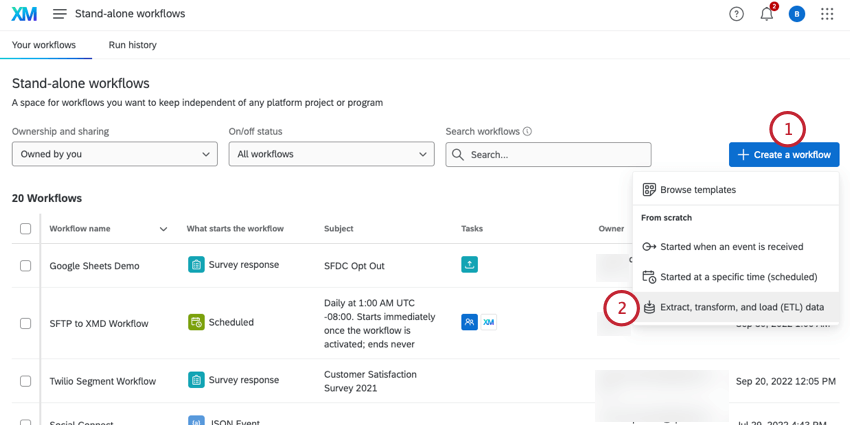

- From the stand-alone Workflows page or the Workflows tab in a project, click Create a workflow.

- Select Extract, transform, and load (ETL) data.

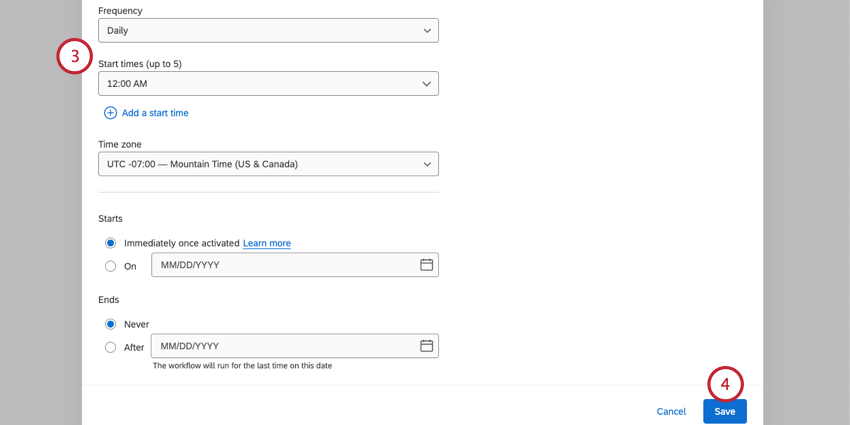

- ETL workflows usually run on a recurring schedule. Choose a schedule for your workflow. See Scheduled Workflows for more information about setting a workflow’s schedule.

- Click Save.

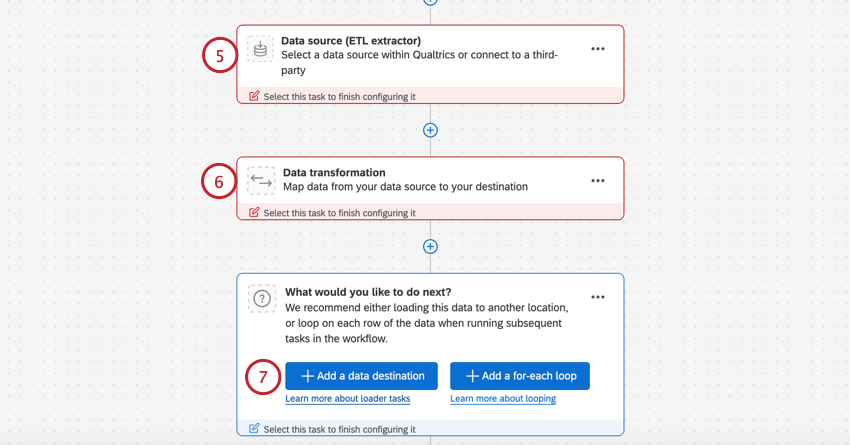

- Click Data source (ETL extractor) to choose an extractor task to use. See Available Extractor Tasks for a list of tasks you can use. You can add multiple extractors in 1 workflow.

- If you’d like to transform data before you load it, click Data transformation. This step is optional. See Basic Transform Task for more details.

- Click Add a data destination to choose a loader task to use. See Available Loader Tasks for a list of tasks you can use. You can add multiple loaders in 1 workflow.

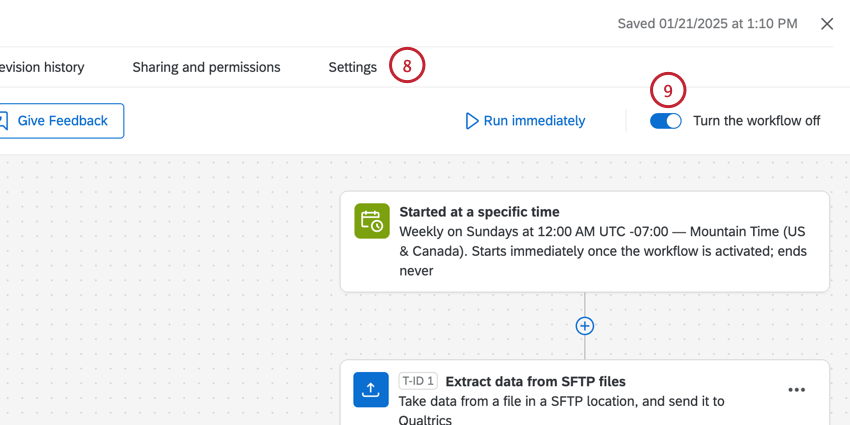

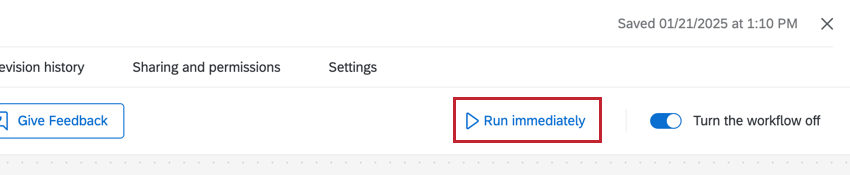

- Optionally, go to Settings and set up workflow notifications so that you are alerted if your workflow ever fails.

- Don’t forget to turn your workflow on.

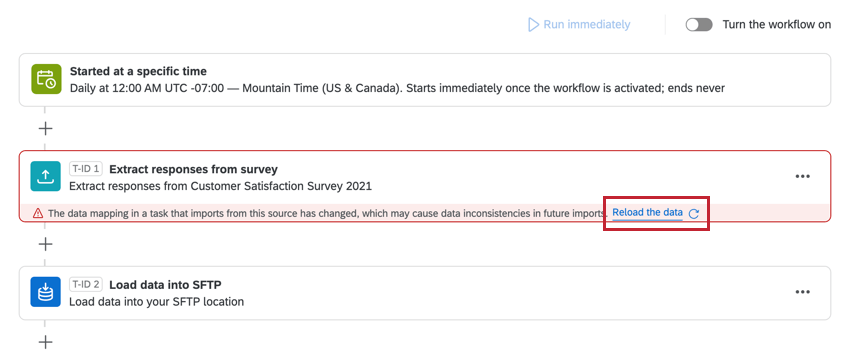

Reloading Data

When there’s been a configuration change between ETL tasks that depend on each other, a button will appear to reload all data with the new configuration. Click Reload the data if you’d like to reprocess the old data.

Available Extractor Tasks

Here are some of the extractor tasks available at this time:

- Extract data from Qualtrics File Service task: Take data you’ve stored in the Qualtrics File Service and save it elsewhere in the platform, such as XM Directory.

- Extract data from SFTP files task: Extract data from your SFTP server and import it into Qualtrics.

- Extract data from Salesforce task: Extract data from your Salesforce instance and import it into Qualtrics.

- Extract data from Google Drive task: Extract data from your Google Drive account and import it into Qualtrics.

- Import Salesforce report data task: Extract data from a Salesforce report to import into Qualtrics.

- Extract responses from a survey task: Extract data from your Qualtrics surveys and upload it elsewhere, such as an SFTP server.

- Extract employees from HRIS task: Extract employee data from a popular HRIS, such as Workday, to import into your Qualtrics EX directory.

- Extract data from data project task: Extract data from your Qualtrics imported data project and upload it elsewhere, such as your own external database.

- Extract employee data from SuccessFactors task: Extract employee data from your SuccessFactors instance and import it into Qualtrics.

- Extract recruiting data from SuccessFactors task: Extract your recruiting data from SuccessFactors to import into Qualtrics.

- Extract data from Snowflake task: Extract data stored in Snowflake to import into Qualtrics.

- Extract data from Amazon S3 task: Extract data stored on Amazon S3 to import into Qualtrics.

- Extract run history reports from workflows task: Extract reports about your past workflow runs to import elsewhere, such as an SFTP server.

- Extract data from tickets task: Extract Qualtrics tickets data to import into another destination, such as an SFTP server.

- Extract data from Discover task: Extract data from your Discover account and import it into Qualtrics.

Available Loader Tasks

Here are some of the loader tasks available at this time:

- Load B2B account data into XM Directory task: Save imported data to your XM Directory.

- Add contacts and transactions to XMD task: Save imported data and transactions to your XM Directory.

- Load users into EX directory task: Save imported employee data in your EX directory or EX project.

- Load users into CX directory task: Save imported CX user data.

- Load data into a data project task: Save imported data from a data extractor task into an imported data project.

- Load into a data set task: Save imported data into a dataset to use with a merge task.

- Load data into SFTP task: Save imported data to an SFTP server.

- Load data to Amazon S3 task: Save imported data to an Amazon S3 bucket.

- Load responses to survey task: Save imported data into a Qualtrics survey dataset.

- Load to SDS task: Save imported data into a Qualtrics supplemental data source.

- Load data to location directory task: Save imported data into a Qualtrics location directory.

Available Data Transformation Tasks

The following tasks are available to you for transforming the data you process in your ETL workflows:

- Basic transform task: Change the format of strings and dates, calculate the difference between dates, perform math operations on numeric fields, and more.

- Merge task: Combine multiple datasets into a single dataset.
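To make the two task types above more concrete, here is a minimal local sketch of the kinds of operations they describe. It does not show how Qualtrics implements these tasks. The function names (`basic_transform`, `merge`), the field names, and the sample rows are all assumptions made up for illustration.

```python
from datetime import datetime


def basic_transform(row: dict) -> dict:
    """Illustrate string formatting, date reformatting, a date difference, and numeric math."""
    opened = datetime.strptime(row["opened"], "%m/%d/%Y")
    closed = datetime.strptime(row["closed"], "%m/%d/%Y")
    return {
        "email": row["email"].strip().lower(),                 # change string format
        "opened": opened.strftime("%Y-%m-%d"),                 # change date format
        "days_open": (closed - opened).days,                   # difference between dates
        "score_pct": round(float(row["score"]) / 5 * 100, 1),  # math on a numeric field
    }


def merge(left: list, right: list, key: str) -> list:
    """Combine two datasets into one, matching rows on a shared key (left join)."""
    lookup = {r[key]: r for r in right}
    return [{**l, **lookup.get(l[key], {})} for l in left]


if __name__ == "__main__":
    raw = {"email": " Ana@Example.COM ", "opened": "01/05/2024",
           "closed": "01/12/2024", "score": "4"}
    cleaned = [basic_transform(raw)]
    regions = [{"email": "ana@example.com", "region": "EMEA"}]
    print(merge(cleaned, regions, "email"))
```

The merge here keeps every row from the first dataset and adds matching fields from the second. That is one possible merge behavior chosen for the sketch, not a statement about the options the merge task offers.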

Troubleshooting Data Extracting and Loading Tasks

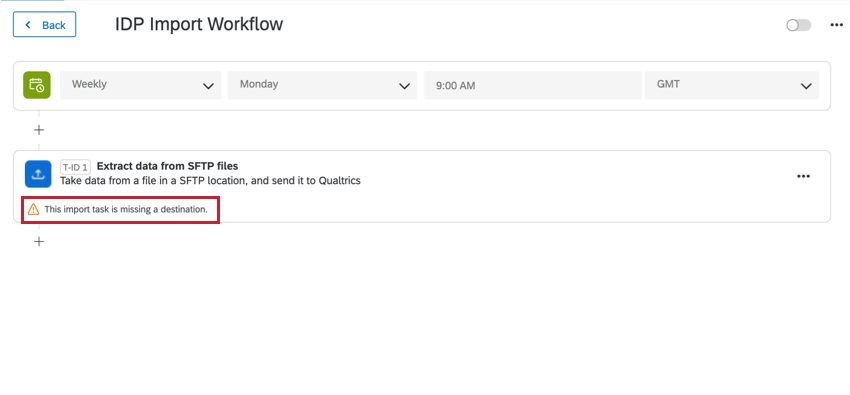

Incomplete Workflows

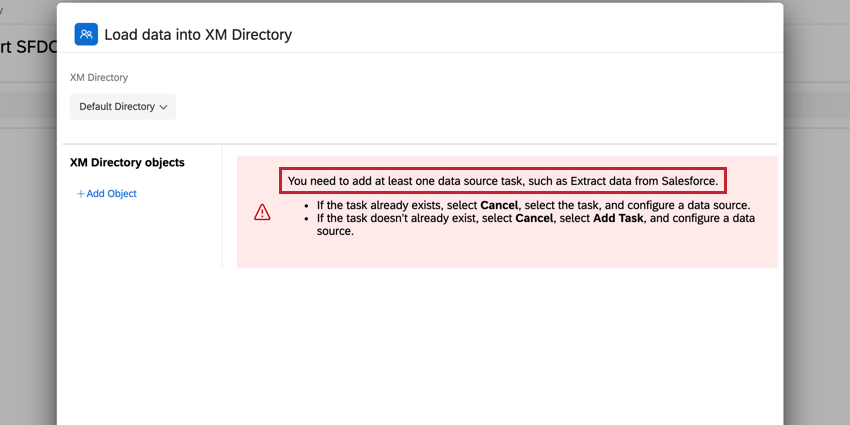

Data extractor and loader tasks must be used together. If part of the setup is missing, the workflows editor will alert you.

Workflows Failing

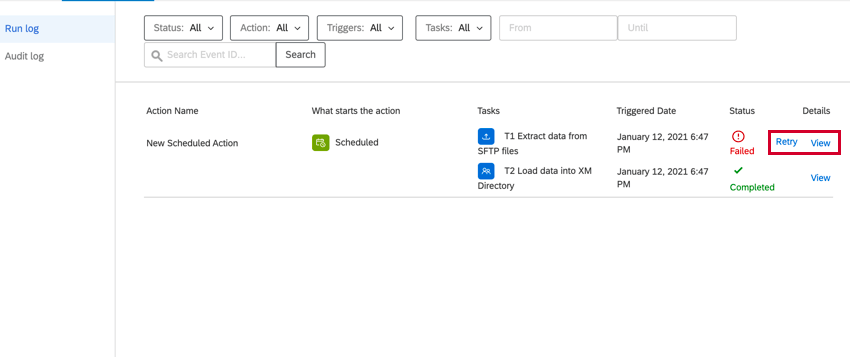

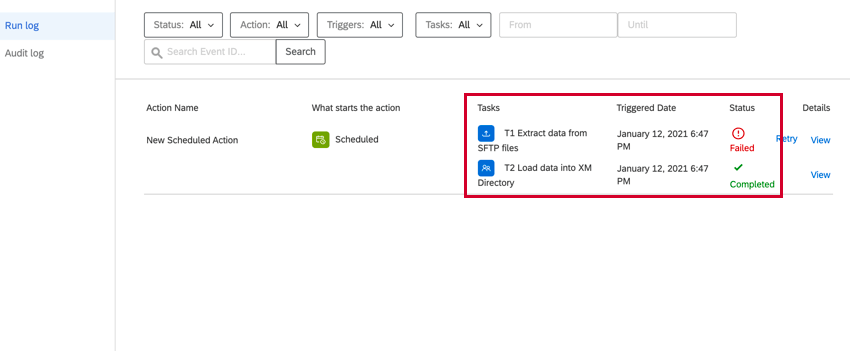

If your tasks are failing or not firing properly, the first place you should look is Workflows reporting & history. This will contain information about every time your workflow fired and the results of that workflow.

In reporting & history, each piece of your workflow will have its own entry, making it easy to pinpoint where things went wrong.

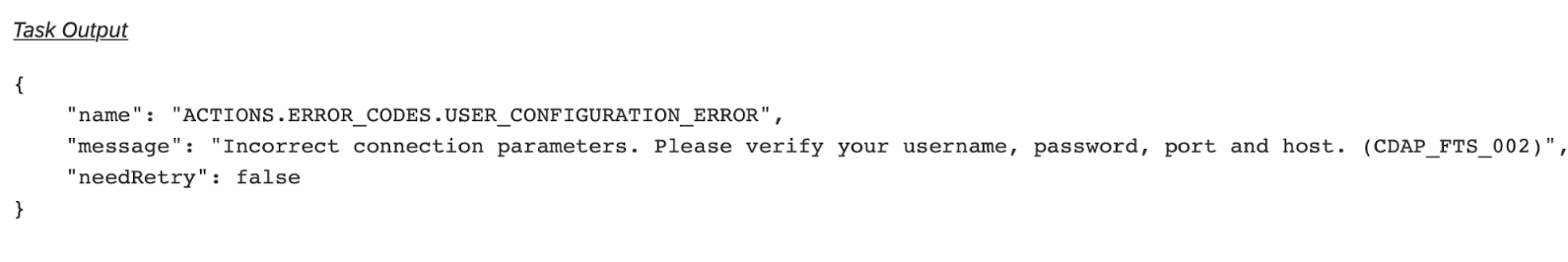

After identifying the problem, you can click View under Details to see more information that can help you diagnose and fix it. This will pull up the JSON payload for the task. Scroll down to the Task Output section to find any errors.
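If you copy the JSON payload out of the Details view, a short script can help you scan a long payload for error fields. The sketch below is an assumption-heavy illustration: the payload structure and the key names in the sample (`taskOutput`, `errorMessage`, `rows`) are hypothetical, not the documented Qualtrics format. The helper simply walks any nested JSON and reports non-empty values stored under keys that contain "error".

```python
import json


def find_errors(node, path=""):
    """Recursively collect (path, value) pairs for non-empty keys containing 'error'."""
    hits = []
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}" if path else key
            if "error" in key.lower() and value:
                hits.append((child, value))
            else:
                hits.extend(find_errors(value, child))
    elif isinstance(node, list):
        for i, item in enumerate(node):
            hits.extend(find_errors(item, f"{path}[{i}]"))
    return hits


if __name__ == "__main__":
    # Hypothetical sample payload; real payloads will differ.
    sample = json.loads("""
    {"taskOutput": {"status": "failed",
                    "errorMessage": "Connection refused",
                    "rows": [{"id": 1, "error": "missing email"}]}}
    """)
    for location, message in find_errors(sample):
        print(f"{location}: {message}")
```

Because the search matches on key names rather than a fixed schema, it may surface fields that are not true errors, so treat the results as a starting point for reading the Task Output section.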

After editing your workflow and fixing the problem, you can return to reporting & history and click Retry to rerun the workflow.